PlanBlue: Scaling an image recognition AI system to protect coastal ecosystems

AI-driven seafloor mapping –

Geodata for the blue carbon market

Ensuring scalability through better MLOps & improved data infrastructure

Understanding Blue Carbon

Blue carbon refers to carbon dioxide that is captured from the atmosphere and stored by ocean ecosystems. “Blue” indicates here the storage’s association with water. When experts talk about the carbon storage potential of coastal blue-carbon ecosystems, they usually mean salt marshes, mangroves, and seagrass meadows. These ecosystems can store larger quantities of carbon compared to regular forests, in some cases up to 35x more. Monitoring and protecting these ecosystems, therefore, plays an important role in the broader challenge of stopping climate change, but available data is scarce. PlanBlue, a Bremen-based company, focuses on precisely this: mapping of the seafloor for the blue carbon market.

About PlanBlue

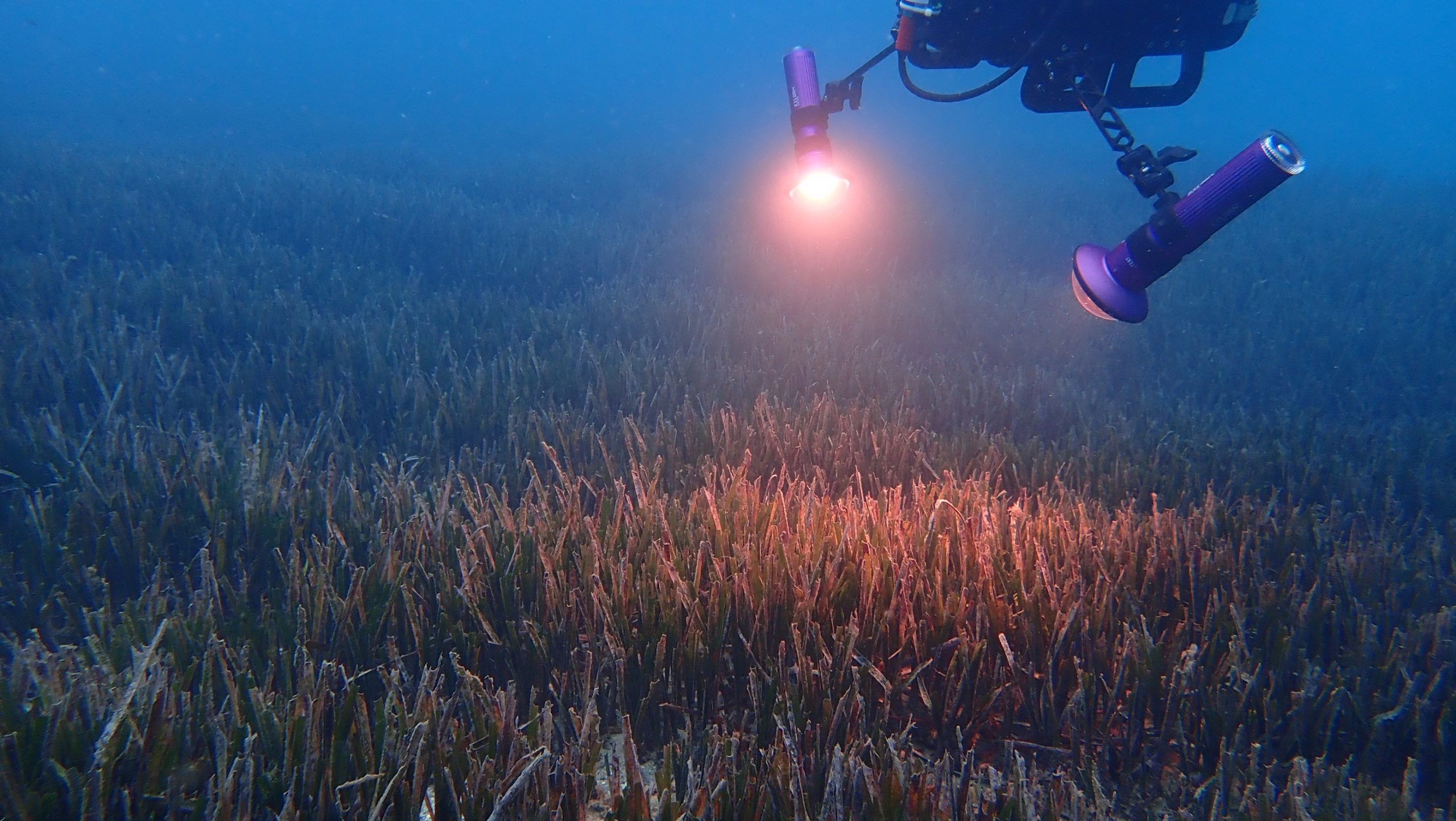

Founded in 2017, PlanBlue uses advanced imaging techniques and machine learning to map and analyze the seafloor in coastal areas. With a hyperspectral camera, a regular RGB camera and a selection of sensors, PlanBlue collects a wealth of data of a specific area. Their core business is the data processing and analysis, to turn this into highly detailed layered maps that provide insights on the health of the ecosystem, it’s carbon sequestration potential and the biodiversity. These data processing pipelines are developed using machine learning.

One of their base data products is orthoimagery of the seafloor. Orthoimagery, which are images adjusted to show true distances and positions like a map, are essential for determining where seagrass grows well, identifying areas that need more protection, and finding the best spots to plant new meadows. Building on this type of imagery data, PlanBlue can add layers of detailed insights, for example of the health state of the seagrass and its carbon stock. PlanBlue employs an in-house team of data and ML engineers who devote considerable time and effort to analyzing the marine ecosystems.

The Challenge of scalability

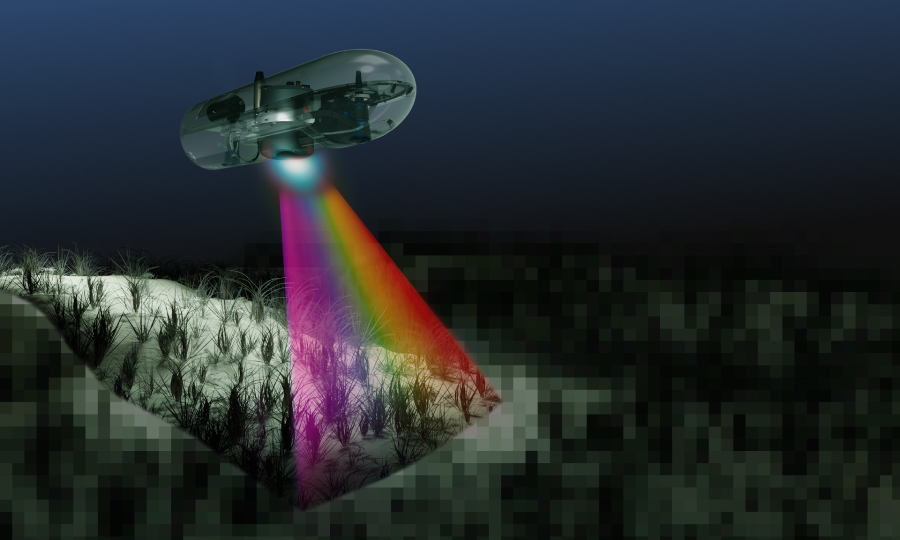

By July of 2024, PlanBlue will have a modified camera system integrated with an autonomous underwater vehicle that will help them significantly increase their capabilities for scanning the ocean floor (see picture below). PlanBlue is planning to expand the scale of their campaigns from a few diver operated kilometers to 4000 km of drone operated coastline. Moreover, a growing data team also means that a more efficient collaboration on algorithms and data is necessary. To address these challenging design tasks, especially in the areas of MLOps and a scalable AI system, PlanBlue decided to hire Enjins.

Identifying bottlenecks during the AI Audit

In a 4-week AI audit, Enjins assessed the performance of the already-developed algorithms of PlanBlue, and focused particularly on what infrastructural changes were needed going forward to leverage the algorithms at scale.

One of the questions PlanBlue came to us with was the effectiveness of their seagrass detection algorithms under various water and lighting conditions worldwide. Our assessment, however, showed that the performance of the respective computer vision models is robust. The audit revealed some other areas of focus to support PlanBlue’s expansion plans:

- The processing pipeline’s capacity needs to be expanded to handle higher data volumes and ensure the stability and reliability of the data management system

- Current manual triggers of processing steps would benefit from automation to keep processing time low and decrease likelihood of errors

- Development of effective MLOps procedures will ensure effective collaboration on development and maintenance in a larger team

As part of the AI Audit, Enjins developed an infrastructure blueprint and a corresponding implementation roadmap to solve these challenges. PlanBlue decided to partner with Enjins to jointly implement the outlined plan over the following months.

”We decided to get an external expert opinion to make sure that we weren’t falling into any pitfalls in infrastructure and/or algorithms that could be easily avoided.

Guy RigotCTO of PlanBlue

Development Phase: Building scalable infrastructure

The development phase following the AI Audit was scoped to address the identified challenges on two levels: First, by setting up an improved data management infrastructure and MLOps platform including new tools and procedures. And second, by preparing PlanBlue’s employees to maintain the new setup effectively and to continuously improve it with inhouse capabilities.

First: Ensuring scalability of the data management system & processing pipelines

Future proofing PlanBlue’s data management system in the cloud

Significantly higher data volumes, running parallel projects, and a larger team collaborating on the data increase the demands on the stability and reliability of the data management system. We had to make sure that after scaling up, the team members could still efficiently and consistently access all seafloor image data. To achieve this, we set up their existing data management system in Terraform and integrated it seamlessly into the new overall cloud setup. Storing image data on S3 buckets and using MongoDB for associated metadata where already in use and we extended their use to other parts of their data processing pipelines, allowing quick, easy navigation and consistent retrieval of image indexes via a Lambda function and other processes.

Wrapping Kedro in AWS batch increases pipeline processing capacities

To address the challenge of significantly higher data volumes, we wrapped all data processing steps into a parallelizable compute function. During the audit, it became clear that one of PlanBlue’s strengths is their consistent modularization of every step of their processing pipeline using Kedro modules. Building on that approach, we wrapped the Kedro pipelines in an AWS Batch function. This setup allows each processing step to be parallelized and automatically scaled in the cloud, significantly increasing pipeline processing capacity.

Second: Reducing reliance on manual triggers in processing pipeline

Introducing flexible task orchestration with Airflow

To address the challenge of the current reliance on manual triggers between the many steps in the pipeline, we suggest implementing event-triggered orchestration of the various processing modules. However, PlanBlue emphasized their need to retain the ability to manually alter or omit processing steps based on data intricacies or client preferences. Hence, we opted for nonconventional use of Airflow to support flexible orchestration based on events rather than on scheduled tasks. With the combined use of Kedro, AWS Batch, Lambda and Airflow, PlanBlue’s processing pipeline can easily scale to meet its future requirements.

Third: Introducing effective MLOps procedures to ease collaboration

Isolating development- & production environments and setting up CI/CD pipeline

We established a stricter isolation of development- and production environments. By creating a dedicated development environment, we enable numerous developers to build and test improved models without risking disruptions to the production pipeline. In combination with their code version control system, Bitbucket, and the setup of a new CI/CD pipeline, testing and deploying code became much easier.

Making infrastructure development & maintenance easier with Terraform

To make maintaining and developing the PlanBlue infrastructure more manageable and standardized, we continued and improved their use of Terraform, an Infrastructure as Code (IaC) tool. This approach allows platform engineers to define and deploy all components of their cloud infrastructure through a single script, streamlining debugging, component addition, and collaboration.

Implementing MLFlow for model tracking & registration to ease collaboration

Another important element for improving collaboration was the implementation of MLFlow as a central platform for model tracking and registration. Every time a new model is trained, MLFlow stores the training procedure, including the chosen parameters and metrics, in a single registry. This central source simplifies reviewing colleagues’ work, building on their findings, and identifying the best model. Previously, the process required actively gathering results from various sources and consulting multiple people, which does not scale well with a larger team.

Scaling up infrastructure for sustainable growth & innovation

Significantly enhancing PlanBlue’s AI & data infrastructure and processes over the course of 4 months was mainly possible through close collaboration between Enjins and PlanBlue. In the end, all changes were made with the in mind who will be working with and maintaining them. This way we ensured that the PlanBlue inhouse AI team can maintain and further scale the AI system in the future – thereby contributing to their mission: providing comprehensive insights into seafloor health to help slowing down climate change.

Follow us on LinkedIn!

Follow us on LinkedIn! Check out our Meetup group!

Check out our Meetup group!