How you shouldn’t do ML

The explainability of complex systems is a topic that fascinates me. What is a good explanation? And to whom? And how do we keep control of complex systems of algorithms without killing innovation? One of the newest techniques is the counterfactual explanation; the name already reveals how it works. In this article, I won’t spend too much time on explainability itself (though I would love to). Rather, I would like to use a counterfactual to explain how you shouldn’t approach an ML project.

I have worked a lot for relatively small, entrepreneurial organizations (like start-ups and SMEs) as well as for large, highly regulated organizations. It often strikes me how large the differences can be between the former and the latter. And it often comes down to one thing: the fear of risk.

It doesn’t really matter whether we talk about large municipalities, the tax office, health insurers or large financial institutions. All of them deal with heavy compliance requirements, the risk of reputation loss and high fines, and many eyes watching them. The result: creativity gets killed, and what remains is a graveyard of development and ML projects. The fear of risk might actually be the biggest risk itself.

In a theoretical world…

Imagine the following: a pandemic breaks out on the other side of the world. It spreads widely and quickly around the globe. We close shops and restaurants and kill large chunks of our economy. One thing is clear: we need to innovate our way out of this situation, so we need to develop a vaccine. But what about all the rules, the regulation, the risk we take with people’s lives? Fair point.

The government decides: we need to do everything to develop a vaccine. And we need to do it safely, of course. They start to contract and hire smart pharmacists and pharmaceutical companies. They also draw up a set of rules which the vaccine must comply with before roll-out. It should be properly tested, but not on humans of course; that would be unethical without proper testing. And the vaccine shouldn’t discriminate either: it should work equally well for men and women. Lastly, the performance should be good: at least 80% effectiveness.

Months go by, but the smart pharmacists make progress. A first version seems to work pretty well; it’s promising. The vaccine is built on top of the newest techniques, and other pharmacists are enthusiastic about it. The vaccine is about 72.3% effective, but that will probably increase in the upcoming months. We will soon be out of this situation!

Meanwhile, Belgium decides to start with the inferior vaccine. They say they will keep on developing and improving while also starting to vaccinate small portions of their population. What unethical morons, we know better.

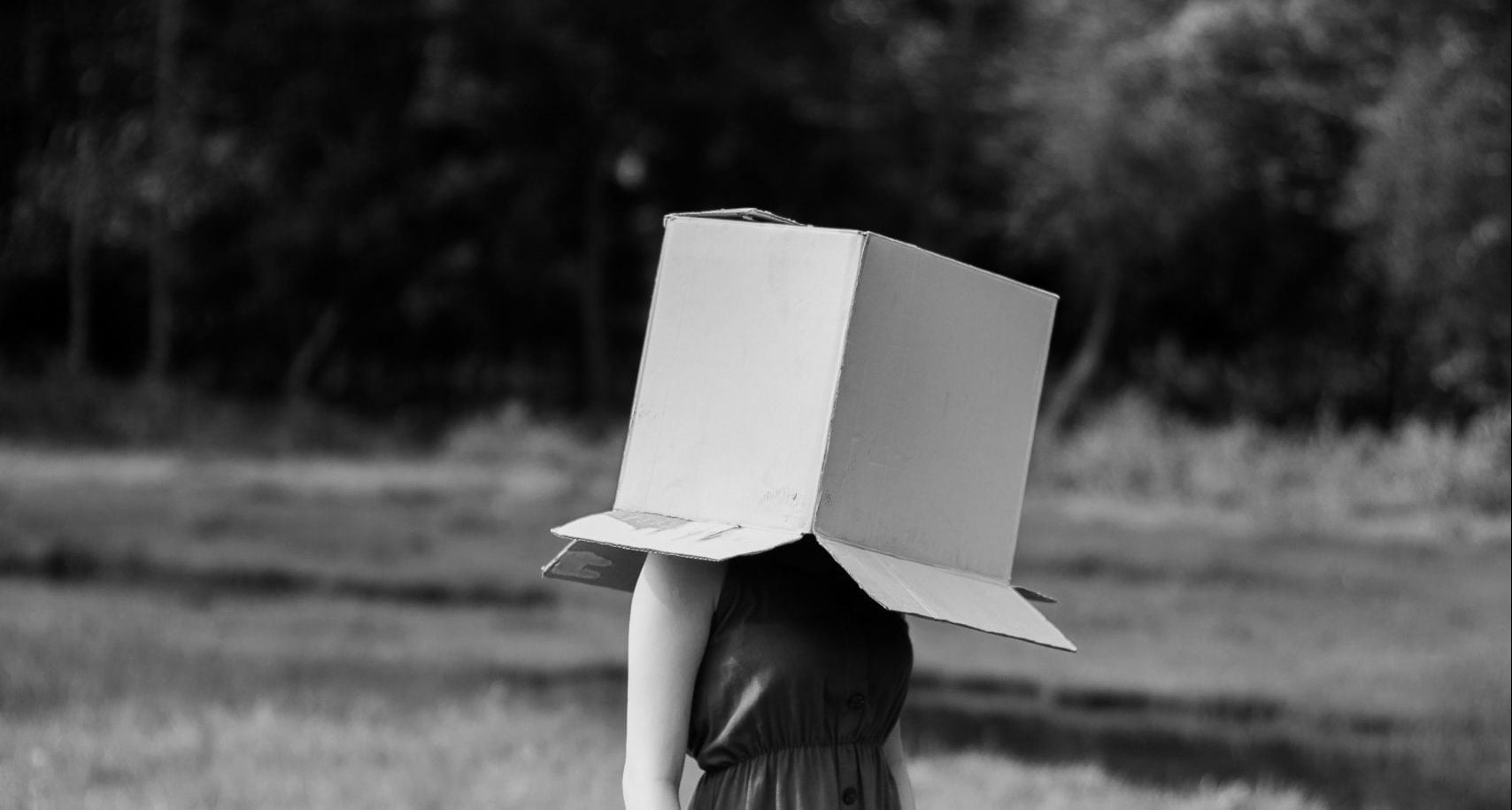

Belgians, testing a vaccine for humans on actual humans. So unethical! Fortunately we know better!

Several months pass, and indeed the effectiveness increases until it hits 80.2%. It’s time to test the vaccine, but of course not on humans. Dead pigs should be sufficient. In the end we are almost identical anyway.

During the test, the effectiveness turns out to be slightly overestimated, which means: back to the drawing board! Months pass until a new version becomes available. And it passes the test on dead pigs! That means all requirements are met, and we can schedule vaccination in just a few weeks!

The government decides to search for a manufacturer. Of course, one that has experience with the newest technique. That turns out to be harder than expected, and more expensive. That’s a bummer: the money to fund the production of the vaccine is, in the end, the money of all of us. Under public pressure, the government decides to organize a tender, which is won by the cheapest manufacturer. Unfortunately, the manufacturer needs 6 months to produce all vaccines. Six months. Six entire months.

A year passes before everything is ready. The economy has taken a big hit, but the day that everyone can be vaccinated is coming closer. Finally, the vaccine is ready to be operationalized! Next Friday is going to be the big day.

The day turns out to be a disaster. It’s a mess. There are not enough doctors, people show up at the wrong hospital, the vaccines are at the wrong locations or simply not delivered; basically nothing works as planned. Even worse, we have no grip on the numbers: we don’t know how many people were vaccinated, when and by whom. And we can’t monitor the side effects either. We are operating completely blind.

Days pass by, and unfortunately, some people get sick and a few even die. It turns out to be mostly young women. How could this happen? We tested over and over… Are dead pigs and living humans a bit further apart after all?

Fortunately, we have our dashboard to monitor infections. At least those should decrease, right? But they don’t. Of course, the virus mutated a little over the past few months, but that shouldn’t affect the effectiveness too much, right? Well, as it turned out years later: it does. The virus of 2 years ago behaves very differently from the virus of today.

Meanwhile, Belgium iterated over time, productionized by design and ended the pandemic years before us, with fewer casualties, a better vaccine and a stronger economy.

Now replace virus by data and vaccine by model

Too lame and silly? Well, to be honest, if you replace virus by data and vaccine by model, you unfortunately come pretty close to the (traditional) way of doing ML at many highly regulated organizations:

- An extensive set of rules in a complex framework, but no proper design

- A theoretical model – with theoretical performance – but no real life testing

- Long development cycles, but no eye on a changing world (concept drift)

- Extensive tuning, but the step to production is often discussed too late

- Lots of testing, but very little control (monitoring) once exposed to the outside world

- Very complex models, which we then can’t explain in production
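
To make the monitoring and concept drift points a bit more concrete, here is a minimal sketch of what "keeping an eye on a changing world" could look like in code. It is only an illustration, not a recipe: the class name `RollingAccuracyMonitor`, the window size and the 80% threshold are all my own assumptions, and it presumes that true outcomes eventually become available for live predictions.

```python
from collections import deque


class RollingAccuracyMonitor:
    """Track accuracy over the most recent labelled predictions (hypothetical helper)."""

    def __init__(self, window_size: int, min_accuracy: float) -> None:
        self.min_accuracy = min_accuracy
        # A deque with maxlen silently drops the oldest outcome when full.
        self.outcomes = deque(maxlen=window_size)

    def record(self, prediction, actual) -> None:
        """Store whether a live prediction matched the outcome observed later."""
        self.outcomes.append(prediction == actual)

    def accuracy(self):
        """Return accuracy over the current window, or None if nothing is recorded."""
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def is_degraded(self) -> bool:
        """Flag the model only once the window is full and accuracy is below the bar."""
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.accuracy() < self.min_accuracy


# Assumed threshold: the same 80% bar as the vaccine in the story.
monitor = RollingAccuracyMonitor(window_size=5, min_accuracy=0.8)

# At first the model matches reality on every case.
for prediction, actual in [(1, 1), (0, 0), (1, 1), (0, 0), (1, 1)]:
    monitor.record(prediction, actual)
healthy_accuracy = monitor.accuracy()
healthy_flag = monitor.is_degraded()

# Then the world changes and the same model starts to miss.
for prediction, actual in [(1, 0), (0, 1), (1, 0)]:
    monitor.record(prediction, actual)
drifted_accuracy = monitor.accuracy()
drifted_flag = monitor.is_degraded()
```

The point is not this particular metric. A check like this only works if the model is already exposed to real cases early on, which is exactly what the Belgians in the story did.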

I’m not saying you don’t need rules and regulations. But what strikes me is the fear of risk, which ultimately leads to worse models, problems in production, big bang deployments and very slow iterations once finally in production. As in the example above, it costs us so much more than fast iterations, progressive deployments, real-life testing and a focus on operationalizing would. We can learn a lot from innovative scale-ups and from agile DevOps teams, which nowadays also operate at large organizations. We should teach ourselves to approach ML projects in a modern, iterative and more flexible way, with an eye for end users rather than theoretical rules.

The fear of risk might actually be the biggest risk itself.
