JustWatch: The Movie Recommendation engine – an AI engineering dream

Working on an AI engineering dream: maturing the JustWatch movie recommender

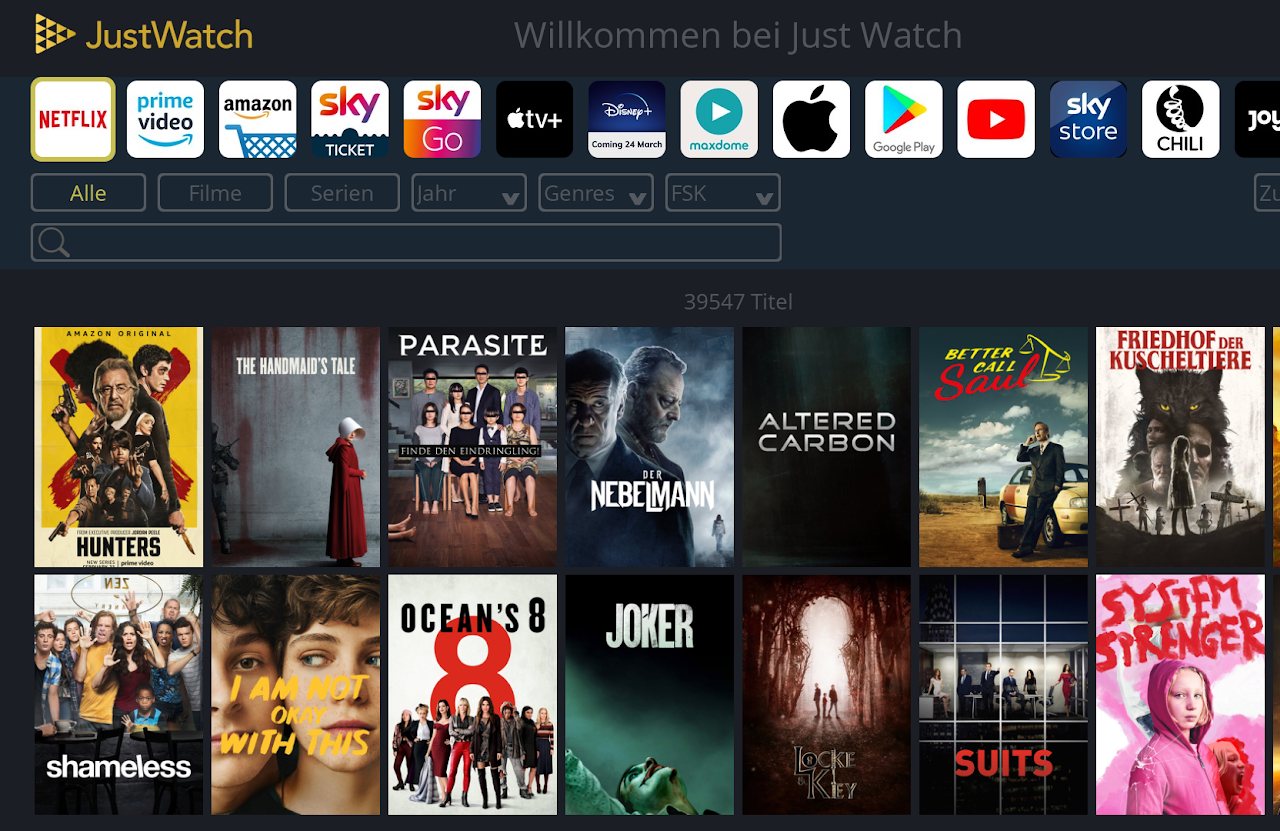

JustWatch is an international movie marketing startup and marketplace. Every day, millions of viewers use JustWatch on the app or on desktop to find movies and TV shows they’ll love. For users, this means JustWatch shows where you can legally watch a movie or TV show (e.g. Netflix or Prime). Users often come back to JustWatch to find their next movie or series, which makes movie recommendations a key aspect of the JustWatch platform. And considering the rich database JustWatch has, any AI engineer would love to build a recommender on it.

About JustWatch

JustWatch was founded in 2014 in Berlin, by a strong team of 6 experienced founders. The technical responsibility lies with Dominik Raute, CTO of JustWatch.

Dominik, what made you decide to co-found JustWatch & can you share how your startup journey started?

First off, thanks for the opportunity to work together on this project: collaborating with Enjins has been an absolute pleasure so far. This has really been a collaboration between equals, with lots of deep and interesting technical discussions from both sides, a refreshing break from the kind of collaboration I used to have with other agencies.

I started my own journey on the technical side as well, working my way up through technical support in an AdTech venture. Working directly with customers was a great learning experience, a way of seeing the business side of technology that has stuck with me ever since. It also got me interested in user behavior and information relevance, topics that later followed me through search, recommendations and discovery. After that, I had a short gig in VoD, evolving the technology architecture of what was then the largest user-generated VoD portal in Germany.

A few months into that, David approached me about co-founding JustWatch by putting my AdTech and VoD experience together, and that’s when JustWatch was born. We became the biggest streaming guide in the world around 2020, and have since been working on improving movie discovery and recommendations, building on our own first-party data.

“Building in-house, with the acceleration of a partner like Enjins that brought in expertise from multiple recommendation projects, was our go-to strategy.”

Dominik Raute, CTO of JustWatch

The Holy Grail: Movie Recommendations

Given JustWatch’s business model, several iterations of a movie recommender system already existed in its architecture.

Dominik, can you explain the importance of movie recommendation in the JustWatch context?

Sure! Thanks to the marketing expertise in our founder round, we quickly built a strong SEO channel that provided a self-sustaining pipeline of people coming to JustWatch who wanted to know where to watch their movies and what was new on their providers. The main challenge back then was, and in a way still is to this day, to keep users coming back more often because they trust JustWatch to come up with great content for them to watch. Since our main business model is on the B2B side, we also started using our first versions of the recommender to improve our industry-leading movie marketing campaigns, for example on Meta or YouTube. This increased the importance of building a very capable recommender system at the core of our B2B and B2C discovery efforts.

Solving 3 Key Challenges: Building a mature taste-based movie recommender

In the first quarter of 2023 Enjins and JustWatch decided to join forces to further mature and accelerate the recommendation technology of JustWatch.

Dominik, can you explain in a bit more detail which challenges had to be solved in the next iteration?

Our old recommender was based on collaborative filtering and was still performing well when we approached Enjins. However, given that the initial recommender technology was multiple years old, and considering how fast data science progresses, we were curious how much it could be improved by now. Specifically, we wanted to tackle the following challenges:

- Cold start problem: The old recommender learned about the titles on our platform purely based on previous interactions. For titles that were added to the JustWatch platform very recently, the recommender would therefore run into the ‘cold start problem’: not enough interactions have been seen yet to make good recommendations for them.

- The popularity bias: Relying on user-title interactions as the only source of information for recommendations means that the popularity of a title biases the recommendation a lot. Generally, recommending popular titles more often is not bad. However, sometimes there are niche movies that barely anyone knows that would be an even better recommendation for you because they hit your particular taste in movies exactly. To create that kind of suggestion, the recommender would have to look beyond the user-title interaction. The recommender needs to understand what identifies your taste in movies and TV, and use all the available information on the title, like genre, themes, actors or even plot descriptions, to fill that need. That is something our old recommender was not capable of.

- Efficiency: Finally, we serve so many people every day and recommend titles from such a huge corpus that efficiency really pays off. The existing tech stack for serving these recommendations online was reaching its limits, and we were aware that with modern innovations in vector search algorithms a more cost-effective solution should be possible.
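
The cold start problem described above can be illustrated with a minimal sketch. It is not JustWatch's old recommender: it assumes a toy item-to-item similarity computed purely from shared audiences, and the titles, users and function names are all hypothetical. The point is only that a title without interactions cannot be related to anything when interactions are the sole signal.

```python
from collections import defaultdict
from math import sqrt

# Hypothetical toy interaction log: user -> titles they interacted with.
interactions = {
    "u1": {"Blockbuster A", "Blockbuster B"},
    "u2": {"Blockbuster A", "Blockbuster B", "Niche C"},
    "u3": {"Blockbuster A", "Niche C"},
    "u4": {"Blockbuster A", "Blockbuster B"},
}
# "Brand New D" was just added to the catalog and has no interactions yet.
catalog = ["Blockbuster A", "Blockbuster B", "Niche C", "Brand New D"]


def users_per_title(interactions):
    """Invert the log: title -> set of users who interacted with it."""
    result = defaultdict(set)
    for user, titles in interactions.items():
        for title in titles:
            result[title].add(user)
    return result


def cosine_similarity(a, b, audience):
    """Cosine similarity between two titles based only on shared users."""
    users_a = audience.get(a, set())
    users_b = audience.get(b, set())
    if not users_a or not users_b:
        return 0.0  # no interactions means no signal at all
    return len(users_a & users_b) / sqrt(len(users_a) * len(users_b))


def similar_titles(title, catalog, audience):
    """Rank all other titles by interaction-based similarity."""
    scores = [
        (other, cosine_similarity(title, other, audience))
        for other in catalog
        if other != title
    ]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


audience = users_per_title(interactions)
print(similar_titles("Niche C", catalog, audience))
print(similar_titles("Brand New D", catalog, audience))
```

In this toy data every similarity involving "Brand New D" is zero, so it can never be recommended and never recommends anything. Title metadata is one way to give such titles a signal from day one.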

Luuk, from Enjins’ side you led the project together with the in-house software engineers and data scientists at JustWatch. What were your first impressions when joining JustWatch?

The no-nonsense attitude and technical know-how of Dominik and his data team allowed us to quickly go in-depth, leading to interesting weekly discussions of state-of-the-art machine learning models. The team of engineers at JustWatch really lives the “fail fast, fail often” mentality, meaning that we were able to roll out new experiments and A/B tests at a very high speed. Combine that with an innovative tech stack and strong awareness of recent ML developments and you have a company that is not afraid to constantly reinvent and improve itself.

Dominik, why did you decide to go for a build (on your own infra) rather than a buy / off the shelf solution?

Recommendations are at the very core of the value we provide as JustWatch. Since we have very rich data in-house to learn from, but are also aware of the iterations needed to get something useful and promising, I personally don’t believe in an off-the-shelf solution here considering the complexity we have to solve. So building in-house, with the acceleration of a partner like Enjins that brought in expertise from multiple recommendation projects, was our go-to strategy.

The Solution

In December 2023, the first big release of the new recommendation system went live. What were the most important components here?

- Two-tower model: The most fundamental change was the switch of the model’s architecture to a flexible two-tower model. This enables the recommender to consider not just which titles a user interacted with, but also what characterizes each title. This approach leverages JustWatch’s detailed data about titles, such as their themes, release dates and actors. We also included more nuanced information like a textual plot description or a film’s poster; basically, all the data a human would also look at to make an informed watching decision.

- Leveraging metadata: This metadata really helped to mitigate the ‘cold start problem’, since most of these features are available immediately at title release. Thus, titles that haven’t been interacted with yet can still be recommended based on taste alone. More early recommendations lead to more interactions, which in turn lead to a well-rounded understanding of the title, increasing recommendation quality further.

- Finding the balance between popularity and niche titles: One of the main topics of experimentation was balancing the recommender between popular, well-liked titles and interesting niche titles that users might not have heard of before. This is a delicate balancing act: as recommendations become more niche, the average production value of the recommended titles tends to go down, but the chance of finding a ‘hidden gem’ goes up, i.e. a title that is perfect for you but so niche that you would never have found it by accident.

- Modern MLOps stack and way of working: We implemented modern MLOps procedures to facilitate model experimentation: model tracking and registration, as well as pipelines for automated nightly retraining and model deployment. Additionally, we were able to save on infrastructure costs by serving the recommendations using efficient Approximate Nearest Neighbor search.
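
To make the two-tower idea above more concrete, here is a minimal sketch of how such a model is used at serving time. It is not JustWatch's production model. Everything in it is an assumption for illustration: the tiny hand-written token vectors stand in for learned parameters, both towers reuse the same table to keep the example small, and an exact brute-force search stands in for the Approximate Nearest Neighbor index a production system would use.

```python
from math import sqrt

# Hypothetical, hand-written 3-d vectors for metadata tokens. In a trained
# two-tower model these would be learned parameters, not constants.
TOKEN_VECTORS = {
    "sci-fi": [1.0, 0.0, 0.0],
    "space": [0.8, 0.2, 0.0],
    "romance": [0.0, 1.0, 0.0],
    "comedy": [0.0, 0.6, 0.8],
    "heist": [0.1, 0.0, 1.0],
}


def normalize(vector):
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector] if norm else vector


def mean_vector(vectors):
    """Element-wise mean of equally sized vectors."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def item_tower(tokens):
    """Item tower: embed a title from its metadata tokens only."""
    return normalize(mean_vector([TOKEN_VECTORS[t] for t in tokens]))


def user_tower(watched_token_lists):
    """User tower: embed a user from the metadata of titles they watched."""
    return normalize(mean_vector([item_tower(t) for t in watched_token_lists]))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def recommend(user_vector, catalog, k):
    """Exact top-k by dot product; a stand-in for an ANN index."""
    scored = [
        (title, dot(user_vector, item_tower(tokens)))
        for title, tokens in catalog.items()
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


catalog = {
    "Established Space Epic": ["sci-fi", "space"],
    "Romantic Comedy": ["romance", "comedy"],
    # Just released: no interactions yet, but metadata is available.
    "Brand New Space Heist": ["sci-fi", "space", "heist"],
}
user_vector = user_tower([["sci-fi"], ["sci-fi", "space"]])
top = recommend(user_vector, catalog, k=2)
print(top)
```

Because the item tower only needs metadata, the brand-new title gets a usable embedding and can be ranked for a sci-fi fan before anyone has interacted with it.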

Go Live: How to compare an old vs new recommender?

Dominik, which metrics were most important for JustWatch to understand if the improved version of the recommender was actually doing better and which results did you see?

There is not one simple answer or metric here that does it all. We needed to look both offline and online to see whether the recommendations made sense qualitatively and quantitatively:

- We are all movie-nerds: The most intuitive check might be that at JustWatch we are all movie-nerds. So, when a few colleagues and I check the recommendations for our favorite movies, it already paints quite the picture. That way, for instance, we realized early on that for some less well-known titles the recommendations from the old recommender were still better, giving us feedback for our model experiments.

- Offline metrics for experimentation: I really enjoyed though that Enjins immediately placed great focus on quantifying the quality of the recommendations to make models more easily comparable. This allowed us to very quickly experiment with new modelling techniques. However, these offline metrics are only a proxy for what will happen when the model is live in production, especially in the case of a recommendation model.

- A/B testing: Once in production, A/B testing is the best way to test the overall effect of a recommender, especially since web traffic on JustWatch is sometimes hard to interpret. For instance, better recommendations typically lead to more user engagement and more interactions. However, a really good recommendation may lead to fewer user interactions if the first recommendation already struck gold and satisfied the user. Isolating the different effects can be tricky. By running a series of A/B tests we were able to verify that the model indeed outperforms the existing recommender on all the important metrics by X. And while it might not be the most exciting, the operational costs of serving the recommendations are of course also relevant when running a business, and these also went down significantly with the new recommender system.
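
As a rough illustration of the A/B testing step, the sketch below checks whether the difference in a conversion-style metric between two groups is likely to be more than noise. It assumes a standard two-proportion z-test, which is one common choice and not necessarily the analysis JustWatch ran. The counts and the function name are hypothetical.

```python
from math import erf, sqrt


def two_proportion_z_test(conversions_a, users_a, conversions_b, users_b):
    """Two-sided z-test for a difference between two conversion rates."""
    rate_a = conversions_a / users_a
    rate_b = conversions_b / users_b
    # Pooled rate under the null hypothesis that both groups behave the same.
    pooled = (conversions_a + conversions_b) / (users_a + users_b)
    standard_error = sqrt(pooled * (1 - pooled) * (1 / users_a + 1 / users_b))
    z = (rate_b - rate_a) / standard_error
    # Standard normal CDF via the error function, then the two-sided p-value.
    cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    p_value = 2 * (1 - cdf)
    return z, p_value


# Hypothetical numbers: group A sees the old recommender, group B the new one.
z, p_value = two_proportion_z_test(
    conversions_a=1000, users_a=10000,
    conversions_b=1100, users_b=10000,
)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

A test like this only says whether a measured difference is likely real. It does not settle which metric to measure, which is the harder question when a great first recommendation can reduce the number of interactions.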

Luuk, going live with complex AI systems is usually not trivial. What were the specific challenges in this case?

The old recommender had problems with cold start and performance at scale, but was otherwise very robust. The new model is more nuanced, but also introduces more complexity. To avoid taking one step forward and two steps back, we needed to do a lot of testing to make sure that the new recommender outperforms the old one in all situations. This entailed qualitative checks by domain experts as well as developing quantitative KPIs that could be used as metrics and tracked during training. No matter how many tests you construct during development though, the quality of a recommender system is best evaluated online, by whether people enjoy using it.
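
One example of a quantitative KPI of this kind is recall@k on held-out interactions. The article does not say which offline metrics were actually tracked, so the choice of recall@k, the function names and the toy data below are all assumptions. The sketch shows how an old and a new model could be compared on the same held-out set.

```python
def recall_at_k(recommended, relevant, k):
    """Share of a user's held-out titles that appear in the top-k list."""
    if not relevant:
        return 0.0
    hits = len(set(recommended[:k]) & set(relevant))
    return hits / len(relevant)


def mean_recall_at_k(recs_by_user, held_out_by_user, k):
    """Average recall@k over all users that have held-out titles."""
    scores = [
        recall_at_k(recs_by_user.get(user, []), relevant, k)
        for user, relevant in held_out_by_user.items()
        if relevant
    ]
    return sum(scores) / len(scores) if scores else 0.0


# Hypothetical held-out interactions and ranked recommendations per model.
held_out = {"u1": {"A", "B"}, "u2": {"C"}}
old_model_recs = {"u1": ["A", "X", "Y"], "u2": ["X", "Y", "Z"]}
new_model_recs = {"u1": ["A", "B", "X"], "u2": ["X", "C", "Y"]}

old_score = mean_recall_at_k(old_model_recs, held_out, k=3)
new_score = mean_recall_at_k(new_model_recs, held_out, k=3)
print(old_score, new_score)  # 0.25 1.0
```

A metric like this is cheap to compute after every training run, which makes models easy to compare, but it remains a proxy for what happens once real users see the recommendations.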

Recommendations for recommenders

JustWatch is not the only company that believes in an internal recommender to leverage data. What would your #1 recommendation be to other companies that start this journey?

Dominik: I’m a big believer in simplicity. When we started out with recommendations, the industry was way less mature. In 2018, we just pulled something battle-tested off the shelf (Ben Frederickson’s “implicit” library with some custom tooling). Since we did have a treasure trove of historical data, the first iteration of the recommender was already surprisingly good. These days, I would probably try something managed first, such as Vertex Recommendations or AWS Personalize.

We hit our roadblocks mainly when looking at the edge cases: Implicit biases in our data, model drift, or solving cold start. The last 20% proved difficult to achieve, and that’s where iterating with the experts at Enjins proved helpful.

Luuk: Start thinking very early on about measuring the results of the recommender in a production setting. While qualitative evaluations and offline metrics can help a lot to experiment quickly, nothing beats seeing the results in production, when users start interacting with the model. What also really helped in this case is the enthusiasm for movies shared by the Enjins and JustWatch teams. This helped the discussions of qualitative results and created a strong drive to keep trying new things to get those perfect hidden gems out of the model.

Follow us on LinkedIn!

Check out our Meetup group!