The Enjins Chatbot Experiment

Generative AI has gained significant attention for its profound impact across industries, its potential to disrupt jobs, and the growing privacy concerns surrounding AI-driven solutions. However, there has been limited discussion of the feasibility of building and operationalizing generative AI solutions. At Enjins, we strive to remain at the forefront of technological advancements, not only by exploring opportunities and assessing their impact, but also by taking practical action and testing the feasibility of these new innovations.

To this end, we have embarked on building multiple proofs of concept (PoCs) that serve as inspiration for our clients. For us, these PoCs are a means to investigate both the potential opportunities and the practicality of implementing generative AI solutions. While we cannot disclose details about our clients' PoCs, we can share one in which we acted as our own client and developed our very own Enjins Chatbot. Meet Enjenius.

Enjenius is an interactive chatbot that encapsulates comprehensive information about Enjins: our vision on data and AI, successful strategies for building data and AI solutions, the industries we have actively worked in, and more. We fed Enjenius publicly available information. Note: we made sure to exclude client-specific data, as we care about the confidentiality of our customers. Additionally, we incorporated our internal strategy document and the research findings from our evaluation of diverse Software-as-a-Service solutions.

Anyone (or well, at the moment at least anyone within Enjins) can engage with Enjenius by asking questions, exploring the depth of our expertise and gaining a deeper understanding of who we are and what we do. In this blog post, we give a detailed account of how we implemented this chatbot (and hence of the feasibility side of generative AI), shedding light on our approach through a set of takeaways.

Enjenius — A copy of Enjins

The objective was to develop a chatbot with extensive knowledge about Enjins that showcases valuable use cases across various industries and provides insights into successfully building an in-house data and AI capability. The chatbot was designed to offer information on AI applications, elaborate on our vision and values, and even give machine learning advice tailored to specific problems. We have been surprised by the coherence of its responses. It goes beyond mere copy-pasting of text, as it merges information and presents it in its own words.

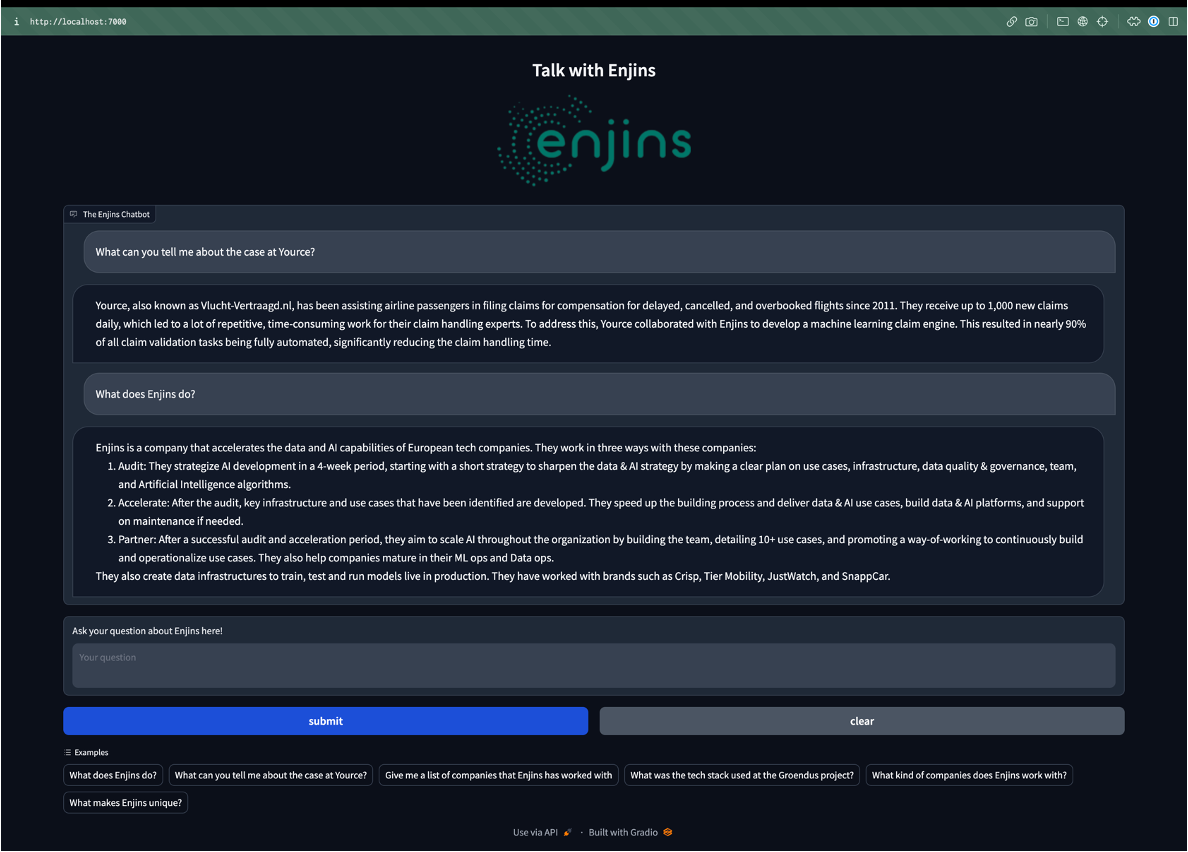

Some examples:

“What does Enjins do?”

Enjins is a company that specializes in machine learning and data-driven solutions. They work with businesses to perform audits on their current data state, develop and implement machine learning infrastructure, and help improve the overall capabilities of their products. In the context provided, Enjins worked with Wunderflats to develop a ranking engine that led to a significant increase in booking requests and helped balance the supply and demand of the marketplace.

“what can you tell me about the case done at Yource?”

Yource, also known as Vlucht-Vertraagd.nl, is a company that helps airline passengers file claims for compensation related to delayed, cancelled, and overbooked flights. They receive up to 1,000 new claims daily, which led to repetitive and time-consuming work for their claim handling experts. To address this issue, Yource collaborated with Enjins to develop a machine learning claim engine. This resulted in the full automation of the claim validation task for nearly 90% of all claims, significantly reducing the claim handling time.

Behind the scenes of Enjenius

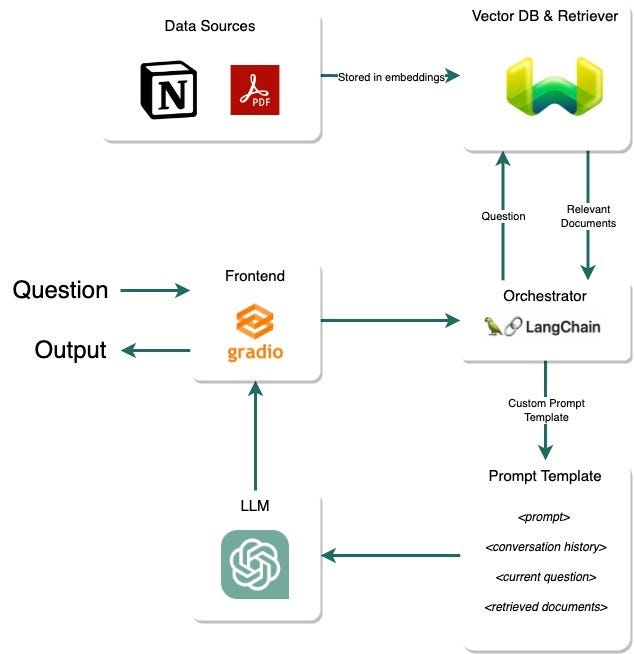

Here, we would like to share a bit more about how this PoC is implemented. Enjenius is built using Langchain, OpenAI, Weaviate & Gradio. We have talked about Langchain before, in our blog on implementing Large Language Models [1], but since we are big fans, we will briefly mention it again.

Takeaway 1: Langchain is and will be your go-to

Langchain [2] offers a wide range of functionality, including agents, custom tools, information retrieval, and chatbot features. It has received a lot of attention in the last couple of months, and with good reason: Langchain is a fast, open-source framework that lowers the barrier to productizing LLMs. Because of its many features, Langchain can be a bit overwhelming at first, but it is worth the effort. Enjenius relies on a couple of Langchain's features, including chat history and a Q&A chain with multiple retrieval sources. We expect the features Langchain offers to be improved and expanded in the near future, as it is one of the trendiest frameworks of 2023.
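The post does not include Enjenius's code, so the following is only a minimal plain-Python sketch of the two features mentioned, chat history and a Q&A prompt fed by multiple retrieval sources. It deliberately avoids Langchain's own classes: `ChatHistory`, `build_prompt` and the source names are hypothetical, and the lambda retrievers are stand-ins for real vector-store queries.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# A retrieval source: takes a question, returns relevant text snippets.
Retriever = Callable[[str], List[str]]


@dataclass
class ChatHistory:
    """Keeps only the most recent (question, answer) turns to bound prompt length."""
    max_turns: int = 5
    turns: List[Tuple[str, str]] = field(default_factory=list)

    def add(self, question: str, answer: str) -> None:
        self.turns.append((question, answer))
        self.turns = self.turns[-self.max_turns:]  # drop the oldest turns

    def render(self) -> str:
        return "\n".join(f"User: {q}\nAssistant: {a}" for q, a in self.turns)


def build_prompt(question: str, history: ChatHistory, sources: Dict[str, Retriever]) -> str:
    """Collect snippets from every source, tag each with its origin, and assemble a Q&A prompt."""
    context = "\n".join(
        f"[{name}] {snippet}"
        for name, retrieve in sources.items()
        for snippet in retrieve(question)
    )
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Chat history:\n{history.render()}\n\n"
        f"Question: {question}\nAnswer:"
    )


if __name__ == "__main__":
    # Hypothetical sources standing in for vector-store lookups.
    sources = {
        "website": lambda q: ["Enjins builds data and AI solutions."],
        "strategy_doc": lambda q: ["Start with a proof of concept."],
    }
    history = ChatHistory(max_turns=2)
    history.add("Hi!", "Hello, I am Enjenius.")
    print(build_prompt("What does Enjins do?", history, sources))
```

A conversational retrieval chain in Langchain typically also rewrites a follow-up question using the history before retrieving; that step is left out here to keep the sketch short.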

Takeaway 2: Validate truthfulness constantly

LLMs tend to hallucinate, which means the model outputs false information while sounding very convincing. Especially when you fine-tune a model, it can seem to give reasonable answers that are in fact false. Moreover, fine-tuning a model is costly and requires quality data. We therefore chose to implement retrieval augmentation, a convenient way to make the model know more about Enjins without fine-tuning. This did sometimes confuse Enjenius, which mixed up Enjins with Enjin, a product ecosystem built around blockchain and NFTs. Explaining more clearly within the retriever tool that it knows everything about Enjins solved the issue.
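A minimal sketch of the fix described above, assuming an agent that chooses tools by reading their descriptions, plus a cheap check that could be rerun on every change to catch the Enjins/Enjin mix-up. The tool name, the description wording and the keyword pattern are hypothetical choices, not the text used in Enjenius, and a keyword check is only a regression test for this one failure, not a general hallucination detector.

```python
import re
from dataclasses import dataclass


@dataclass
class RetrieverTool:
    """Hypothetical stand-in for an agent tool: the LLM only sees its name and description."""
    name: str
    description: str


enjins_tool = RetrieverTool(
    name="enjins_knowledge_base",
    description=(
        "Use this for every question about Enjins, the data and AI company: "
        "its vision, services, cases and industries. "
        "Enjins is NOT Enjin, the blockchain and NFT ecosystem."
    ),
)


def render_tools(tools):
    """Format tool names and descriptions the way an agent prompt might list them."""
    return "\n".join(f"- {t.name}: {t.description}" for t in tools)


# Naive regression check: flags answers that drift into blockchain topics (hypothetical term list).
CONFUSION_PATTERN = re.compile(r"\b(blockchain|nfts?|crypto\w*)\b", re.IGNORECASE)


def looks_confused(answer: str) -> bool:
    return CONFUSION_PATTERN.search(answer) is not None
```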

Takeaway 3: Find the right vector database, a crucial element of every LLM solution

The data comes from multiple sources, including the Enjins website and a document written specifically to support the chatbot. This information needs to be retrieved in a smart way and provided to the model. For the chatbot, we chose Weaviate [3], an open-source vector database, also offered as a cloud service, with advanced retrieval technologies for both keyword-based (syntactic) and semantic search. All data is split into smaller chunks and stored in the database. When the user asks a question, the LLM decides which type of source is most relevant and then asks Weaviate to perform a search based on the question and that type.
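To illustrate chunking and combined keyword/semantic retrieval, here is a self-contained toy sketch. It assumes word-based chunks with overlap and uses a bag-of-words count vector as a stand-in for a real embedding model, so its "semantic" score is only lexical here. Blending the two scores with an `alpha` weight loosely mirrors the `alpha` parameter of Weaviate's hybrid search, but it is not Weaviate's actual fusion algorithm; all function names are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List


def chunk_words(text: str, chunk_size: int = 50, overlap: int = 10) -> List[str]:
    """Split text into chunks of `chunk_size` words, each sharing `overlap` words with the previous one."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks


def tokens(text: str) -> List[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def embed(text: str) -> Counter:
    # Toy "embedding": word counts. A real system would call an embedding model instead.
    return Counter(tokens(text))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def keyword_score(query: str, chunk: str) -> float:
    """Fraction of distinct query words that literally occur in the chunk."""
    q = set(tokens(query))
    return len(q & set(tokens(chunk))) / len(q) if q else 0.0


def hybrid_search(query: str, chunks: List[str], k: int = 2, alpha: float = 0.5) -> List[str]:
    """alpha=1.0 uses only the vector score, alpha=0.0 only the keyword score."""
    q_vec = embed(query)
    scored = [
        (alpha * cosine(q_vec, embed(c)) + (1 - alpha) * keyword_score(query, c), c)
        for c in chunks
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [c for _, c in scored[:k]]
```

The overlap keeps text that straddles a chunk boundary retrievable from at least one chunk; the default sizes are arbitrary and worth tuning on your own documents.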

When we started, we had set up ChromaDB. This was easier to set up because, for example, it did not require a class schema definition when uploading data. For this concept, however, we saw that ChromaDB did not always retrieve the most relevant documents. We therefore switched to Weaviate, which retrieved much more relevant documents.
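The post does not describe how retrieval quality was judged. One way to make a comparison between vector stores repeatable is a small labeled set of questions, each paired with the chunk that should come back, scored as a hit rate at k. The sketch below assumes each store is wrapped in a hypothetical function returning chunk ids; the canned results are made up purely to show the calculation.

```python
from typing import Callable, List, Sequence, Tuple

# retrieve(question, k) -> ids of the top-k chunks
Retrieve = Callable[[str, int], List[str]]


def hit_rate_at_k(retrieve: Retrieve, labeled: Sequence[Tuple[str, str]], k: int = 3) -> float:
    """Fraction of questions whose expected chunk id appears among the top-k results."""
    if not labeled:
        raise ValueError("need at least one labeled question")
    hits = sum(1 for question, expected in labeled if expected in retrieve(question, k))
    return hits / len(labeled)


if __name__ == "__main__":
    labeled = [("q1", "a"), ("q2", "b"), ("q3", "c"), ("q4", "d")]
    # Made-up ranked results for one hypothetical store.
    canned = {"q1": ["a", "x"], "q2": ["y", "b"], "q3": ["z", "w"], "q4": ["d", "v"]}

    def store(question: str, k: int) -> List[str]:
        return canned[question][:k]

    print(hit_rate_at_k(store, labeled, k=1))  # 0.5
    print(hit_rate_at_k(store, labeled, k=2))  # 0.75
```

Running the same labeled set against each candidate store turns "retrieved a lot better" into a number that can be tracked as the data or chunking changes.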

Takeaway 4: Inference speed is a challenge

At this point, the bot is still quite slow, with response times of up to 20–30 seconds. We used the OpenAI integration within Langchain, which was unfortunately slow at times.
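Before optimizing, it helps to measure. This is a minimal sketch of timing chatbot calls and summarizing the median, 95th percentile (nearest-rank) and maximum latency; `fake_llm` is a hypothetical placeholder with an artificial delay, to be replaced by the real chain call.

```python
import math
import statistics
import time
from typing import Callable, Dict, List, Sequence


def measure_latency(fn: Callable[[str], object], prompts: Sequence[str], repeats: int = 1) -> List[float]:
    """Call fn(prompt) for every prompt, `repeats` times, and return each wall-clock latency in seconds."""
    latencies = []
    for _ in range(repeats):
        for prompt in prompts:
            start = time.perf_counter()
            fn(prompt)
            latencies.append(time.perf_counter() - start)
    return latencies


def summarize(latencies: Sequence[float]) -> Dict[str, float]:
    """Median, nearest-rank 95th percentile, and maximum of the measured latencies."""
    if not latencies:
        raise ValueError("no latencies to summarize")
    ordered = sorted(latencies)
    p95 = ordered[math.ceil(0.95 * len(ordered)) - 1]
    return {"median_s": statistics.median(ordered), "p95_s": p95, "max_s": ordered[-1]}


if __name__ == "__main__":
    def fake_llm(prompt: str) -> str:
        time.sleep(0.05)  # placeholder for a slow LLM call
        return "answer"

    print(summarize(measure_latency(fake_llm, ["What does Enjins do?", "Who is Yource?"], repeats=2)))
```

Reporting the 95th percentile alongside the median shows whether the 20–30 second responses are typical or occasional outliers.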

We deployed the bot in two ways. First, as a Hugging Face Space, which is a free, fast, and easy way to deploy small ML apps. Second, to a Google Cloud Run instance using a Docker container. This bypasses the queue that exists in Hugging Face Spaces, which makes it a little faster. In the future, we want to improve the response time of the chatbot by optimizing the way we use Langchain and by looking into solutions like Langchain-Serve. We also want to improve the custom input prompt, as this will make the bot fit better into certain other use cases.
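Cloud Run tells a container which port to listen on through the `PORT` environment variable, and the server must bind to `0.0.0.0` rather than localhost. A minimal sketch of serving both deployment targets from one codebase, assuming a Gradio app object (called `demo` here, hypothetical) whose `launch()` accepts Gradio's `server_name` and `server_port` arguments:

```python
import os
from typing import Dict, Mapping, Optional, Union


def launch_settings(env: Optional[Mapping[str, str]] = None) -> Dict[str, Union[str, int]]:
    """Build keyword arguments for Gradio's launch() depending on where the app runs."""
    env = os.environ if env is None else env
    if "PORT" in env:
        # Cloud Run (and similar container platforms) inject PORT; listen on all interfaces.
        return {"server_name": "0.0.0.0", "server_port": int(env["PORT"])}
    # No PORT set: fall back to Gradio's own defaults.
    return {}


# In the app itself (not executed here):
# demo.launch(**launch_settings())
```

The empty fallback assumes that the other environment, such as local development or a Gradio-SDK Space, works with Gradio's default settings.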

If you are interested in experimenting with different generative AI proofs of concept, let us know. We are happy to explore both the impact side and the feasibility side. Feel free to send an email to bmaassen@enjins.com.

[1] https://enjins.com/beyond-gpt-implementing-and-finetuning-open-source-llms/
